Software Defined Vehicles

EU AI Regulation

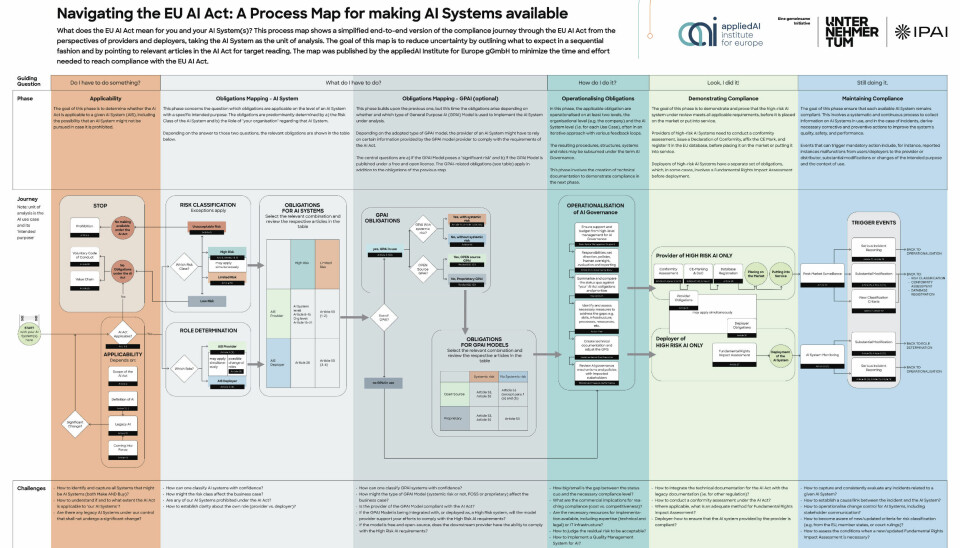

Process Map for making AI Systems available

The EU AI Regulation raises crucial questions, especially for companies in the automotive industry. This article walks through the key phases of the compliance process and highlights the specific challenges of each phase.

The EU AI Regulation aims to create a legal framework for artificial intelligence and applies to providers and users of AI systems within the EU (the final text of the regulation refers to users as "deployers").

Why do companies need to engage with the EU AI Regulation?

For companies in the industrial and automotive sectors, the question of whether they need to engage with the regulation is usually answered with a clear "Yes". Whether it is AI-controlled production facilities, autonomous vehicles, quality control systems, predictive maintenance, or AI components integrated into products, the applicability of the regulation must be carefully examined. The first phase of the compliance process, "Applicability", therefore requires a detailed analysis of the AI systems used and developed to determine whether and to what extent the regulation applies. It is crucial to understand whether your company acts as a provider, user, or both, and what geographical reach your AI applications have. The challenge is to establish clearly which AI systems fall under the regulation and which do not.
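
As an illustration of the "Applicability" phase, the analysis can start from a simple inventory that records, for each AI system, the company's role and whether the system has EU reach. The following is a minimal sketch under stated assumptions: the names `Role`, `InventoryEntry`, and `needs_applicability_review` and the example entries are hypothetical, and the single EU flag is a deliberate simplification, not the legal test for applicability.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Roles named in the article; a company may hold both."""
    PROVIDER = "provider"
    USER = "user"
    BOTH = "both"


@dataclass(frozen=True)
class InventoryEntry:
    name: str
    role: Role
    used_or_offered_in_eu: bool  # assumption: one flag stands in for geographical reach


def needs_applicability_review(entries):
    """Return the names of systems with EU reach, in inventory order.

    This only shortlists systems for a detailed legal analysis;
    it does not decide whether the regulation applies.
    """
    return [e.name for e in entries if e.used_or_offered_in_eu]


# Hypothetical example inventory.
inventory = [
    InventoryEntry("driver assistance system", Role.PROVIDER, True),
    InventoryEntry("predictive maintenance (non-EU plant)", Role.USER, False),
    InventoryEntry("visual quality control", Role.BOTH, True),
]

shortlist = needs_applicability_review(inventory)
print(shortlist)
```

An inventory of this kind gives the later phases a shared list of systems to classify and document.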

How can the risks of AI applications be identified and assessed in detail?

A central aspect of the EU AI Regulation is the risk assessment of AI systems. The "Risk Classification" phase is crucial, as the obligations arising from the regulation largely depend on the risk category of the respective AI system. For the industrial and automotive sectors, high-risk AI systems are particularly relevant, such as those used in safety-critical areas (e.g., driver assistance systems, control of industrial plants) or those that could potentially affect fundamental rights. The challenge lies in identifying and managing the different types of risks associated with AI systems. This requires a deep understanding of how the AI systems function, their potential sources of error, and the consequences that could arise in the event of a failure. A careful risk analysis that considers the specific contexts of use in the industrial and automotive sectors is essential.

What specific obligations arise for AI systems and how are these determined?

In the "Obligations Mapping - AI Systems" phase, companies must use the risk classification to identify the specific requirements that their AI systems must meet. For high-risk AI systems, these may include requirements for data governance, technical documentation, transparency, human oversight, accuracy, robustness, and cybersecurity. In the industrial and automotive sectors, where precision and reliability are of utmost importance, these obligations gain particular relevance. The challenge is twofold: to ensure that the AI systems meet the relevant requirements (e.g., transparency, accuracy, robustness), and to manage the complexity of the legal requirements so that all relevant obligations are understood and fulfilled. This requires a detailed examination of the articles of the EU AI Regulation and the derivation of specific measures for one's own AI applications.
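
The obligations mapping can be tracked as a simple checklist per AI system. The sketch below is illustrative only: the requirement areas are the ones listed above for high-risk systems, while the names `AISystem` and `open_requirements` and the example system are hypothetical. It assumes the system has already been classified and does not model the other risk categories.

```python
from dataclasses import dataclass, field

# Requirement areas listed above for high-risk AI systems.
HIGH_RISK_REQUIREMENTS = (
    "data governance",
    "technical documentation",
    "transparency",
    "human oversight",
    "accuracy",
    "robustness",
    "cybersecurity",
)


@dataclass
class AISystem:
    name: str
    high_risk: bool
    # Requirement areas for which documented evidence already exists.
    evidenced: set = field(default_factory=set)


def open_requirements(system):
    """Return the listed requirement areas that still lack evidence."""
    if not system.high_risk:
        # Simplification: other risk categories are not modelled here.
        return []
    return [r for r in HIGH_RISK_REQUIREMENTS if r not in system.evidenced]


# Hypothetical example system.
lane_assist = AISystem(
    name="lane-keeping assistant",
    high_risk=True,
    evidenced={"technical documentation", "accuracy"},
)

remaining = open_requirements(lane_assist)
print(remaining)
```

In practice, each requirement area would be broken down further into the concrete measures derived from the relevant articles; the flat list here only shows the tracking idea.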

How do companies operationalise effective AI governance?

Compliance with the EU AI Regulation is not a one-time task but requires continuous effort. In the phase of "Operationalization of AI Governance", companies must create internal processes and structures to ensure compliance with the regulation on an ongoing basis. This includes assigning responsibilities, implementing control mechanisms, conducting internal audits, and training employees. For companies with complex organisational structures and established quality management systems, it is important to integrate AI governance seamlessly into these existing structures. The challenge is to establish effective internal governance and responsibilities for AI systems and to ensure that the necessary resources (e.g., personnel, expertise, budget) are available to meet the requirements of the regulation.

How do companies ensure the ongoing compliance of AI systems?

After implementing the necessary measures, the phase of "Maintaining Compliance" is crucial. This includes continuous monitoring of AI systems, recording and handling incidents, regularly reviewing technical documentation, and adjusting compliance measures to new insights and changing regulatory requirements. Especially in dynamic industries like the automotive industry, where technologies and applications evolve rapidly, continuous engagement with AI compliance is essential. The challenge is to keep pace with the latest requirements and guidelines for the EU AI Regulation and to establish and maintain an internal process for assessing and handling incidents related to AI systems.

What overarching challenges must be overcome in AI compliance?

In addition to the specific challenges in the individual phases, there are overarching aspects that companies must consider in AI compliance. These include:

- The complexity of legal requirements and the need to translate them into technical and organisational measures.

- The need for specialised expertise in AI, law, and compliance.

- The integration of AI compliance into existing management systems (e.g., quality management according to ISO 9001, Automotive SPICE).

- The potential impact of the regulation on business models and innovation processes.

- The necessity to provide proof of compliance to supervisory authorities.

- Ensuring complete and correct technical documentation.

- Conducting relevant training and awareness measures for employees.

By addressing these phases and challenges early on, companies can take the necessary steps to meet the requirements of the EU AI Regulation and responsibly harness the potential of AI technologies. The "Process map for making AI systems available", provided by the appliedAI Institute for Europe, UnternehmerTUM, and IPAI, serves as a valuable guide through these phases.