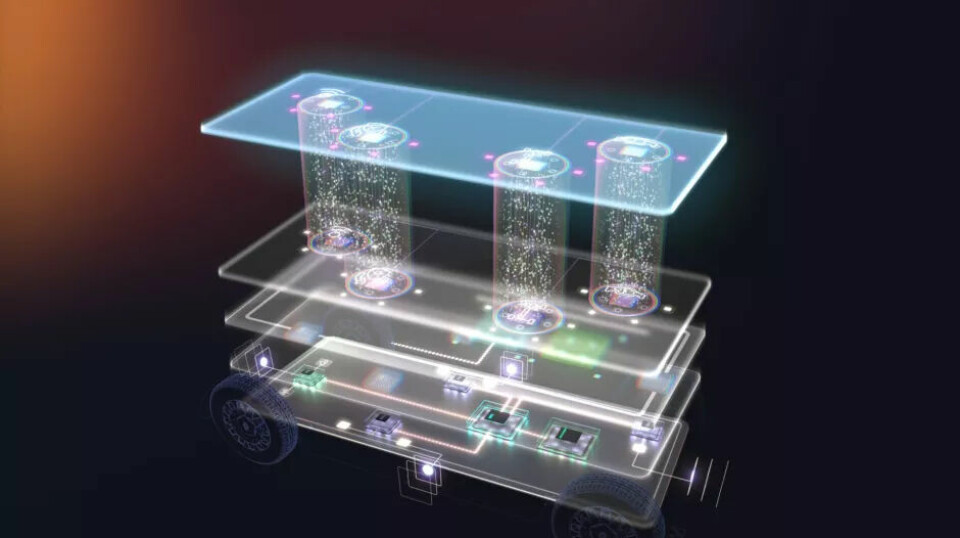


Use of AI

These are the data architectures BMW and Kuka rely on

GenAI makes information available faster. However, it also places new demands on data quality and availability. Only when these are met can companies build AI knowledge systems on their own data that do not hallucinate.

"We are intensively engaged with the use of generative AI in all business processes," reports Michael Conrad, responsible at BMW for Artificial Intelligence, Process Intelligence, and Process Automation, specifically for the enterprise IT scopes. For this purpose, alongside off-the-shelf solutions and highly specialised bespoke applications for specific use cases, the self-service platform BMW Group AI Assistant has also been in use for several months. It is available to all employees to integrate AI into their daily work.

Connectors tap knowledge from diverse sources such as SharePoint, the group's document management system, Confluence, and Jira. Low-code/no-code tools let users add their own code or logic to an AI process and create and use interfaces to other IT systems. "It is true self-service, allowing employees, even without programming skills or deep AI knowledge, to configure and use AI apps and agents, both for their own use and for general use within BMW," says Conrad.

Chatting with the data

The AI apps are available in an app store. At the same time, an agent registry is being set up to keep track of agents and ensure their reusability in the age of agentic AI and multi-agent systems. Since the end of last year, generative AI has also powered the BMW and Mini assistant, through which customers can ask vehicle-related questions 24/7 and have them answered by a virtual agent. "This naturally relieves our traditional customer communication channels and increases customer satisfaction, because customers no longer need to make extra calls or spend a long time searching for information," says Conrad.

"We have been working for many years to make corporate data more accessible and usable," reports Claudia Diedering, responsible for data platforms at BMW. The establishment of a central data lake in AWS about five years ago was an important step to orchestrate and curate the data in one place for various departmental processes. "In the last two years, we have further developed the architecture from a pure data lake to a data lakehouse in partnership with Snowflake," says Diedering.

"With GenAI, we are in a transformation with enormous potential, because thanks to GenAI practically everyone in the company can access data in natural language, with very high automation potential through so-called agent systems," states Diedering. As a first step, employees can already query company data in natural language via "Chat your Data", for example to retrieve the previous day's production results.

The key lies in the metadata

The declared goal is to roll the technology out more broadly. "This requires a major change in how we approach data and data architecture. GenAI agents can only deliver good results on high-quality data that is also enriched with high-quality metadata," explains Diedering. A central task is therefore the development of a BMW Group ontology. "Only by using metadata to describe exactly what the data means, and by linking the data together, will AI be able to find and process this data meaningfully and reduce or avoid hallucinations," says the expert. Data also resides in SAP and in the Microsoft Azure environment; these sources are brought together in the BMW Group ontology.

"Especially in data architecture, it is particularly important to us to use open formats - and that providers offer open formats so that we can easily exchange data across multiclouds," states Michael Conrad. "By logically connecting data in a corporate ontology, we will in the future have the opportunity to decentralise data more and simply leave it at the source instead of copying it elsewhere," Diedering also notes. This saves IT and cloud costs.

BMW continuously evaluates the GenAI solutions on the market, such as Microsoft Copilot or SAP Joule. "We are observing the market very closely because we see a great deal of dynamism in the AI field," reports Conrad. The focus is on keeping maximum flexibility through internal expertise, in-house developments, and integrations rather than committing to a specific technology stack or AI provider. From BMW's perspective, this strategy has already proven successful.

Teaching robots in simple language

Robotics manufacturer Kuka is also building its own LLM-based knowledge systems to provide information more quickly. "Kuka GPT makes the knowledge on our intranet more accessible to all employees. Regardless of the language in which the content is maintained, you always receive the answer in your native language," reports Christian Schwaiger, Technology Strategy Lead at Kuka. For the robotics specialist, LLMs have disruptive potential not only for processes but also for the product: they make it possible to commission and teach robots in natural language, without specific knowledge of robot programming. Agentic AI is already being used for this purpose.

Kuka's data comes from a wide variety of sources. Until now, information has mainly been presented in traditional dashboards, with interpretation left to humans. "GenAI changes the way we handle data because I now have a tool that helps me interpret it. That is one of the biggest changes," notes Schwaiger. Users can also ask questions about information held, for example, as text, audio, or video.

AI must be clearly identifiable

According to Schwaiger, LLMs expand Kuka's data landscape and data architectures but do not fundamentally change them: "We started building a data lake with a corresponding lakehouse approach several years ago to learn from historical data, for example for predictive maintenance. Processing unstructured information is therefore nothing new for us in our data landscape." Among data-processing products on the market, it is noticeable that many providers are adding LLM capabilities and that their functions increasingly overlap. A generative AI solution built today starts with a search over vectorised data held in a vector database. This is the classic Retrieval Augmented Generation (RAG) approach, which combines information retrieval with an LLM.
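For readers less familiar with RAG, the retrieval step can be sketched in a few lines of Python. This is an illustrative sketch only, not Kuka's implementation. The word-count "embedding", the in-memory `InMemoryVectorStore` standing in for a vector database, and the helper names are all hypothetical. The actual LLM call is left out: the sketch stops at the grounded prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (assumption): lowercase word counts.
    A real system would call an embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: stores (vector, text) pairs."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored passages most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_grounded_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Retrieval step of RAG: put the top-k passages into the prompt for the LLM,
    instructing it to answer only from that context (grounding)."""
    context = "\n".join(f"- {p}" for p in store.search(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Gearbox temperature above 80 C indicates a need for predictive maintenance.")
    store.add("The canteen opens at 11:30.")
    store.add("Robot axis 3 reports vibration warnings after long duty cycles.")
    print(build_grounded_prompt("When does a gearbox need maintenance?", store, k=1))
```

Restricting the model to retrieved company passages is what "grounding" means here. It does not make hallucinations impossible, but it ties answers to the organisation's own data.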

To bring GenAI safely into industrial use, certain architectural requirements must be met. Kuka grounds the LLM in its own data using the RAG approach in order to largely avoid hallucinations. In addition, every implementation draws on a toolkit of best practices for building secure AI solutions. Guidelines help check whether a solution is secure, regardless of whether it comes from a provider or from in-house software development. "It is crucial that everyone, from software developers and administrators to users, knows the risks and strengths of AI," notes the Kuka expert.

Strong but lean governance must make clear which type of AI is used where, how it works, and how it arrives at its results. The EU AI Act also requires this. Kuka relies on comprehensive enterprise architecture management, in which every new software solution, including every AI application, goes through a review and approval process. In addition, an AI Council handles highly critical cases.

Reusability is key

"With regard to the use of Agentic AI, it should be ensured that there is reusability: Agent systems have the advantage that various special functions can be flexibly interconnected for different application scenarios," explains Schwaiger. Clear governance is also crucial for data consistency: "I need to know where the data is used, whether the data is accurate, whether they are copies or referenced data from the source system with which I interact."

The most important aspects are therefore technological reuse, data reuse, secure AI, and secure access to data. An AI system must not result in employees suddenly being able to access data that they cannot access in the underlying systems. "Each user's permissions must of course be reflected in the data, first in the respective source system and then also in the knowledge system," says Schwaiger.
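One common way to meet this requirement is to store each source system's access rights alongside the indexed content. Retrieval results are then filtered by the requesting user's rights before anything reaches the LLM. The sketch below illustrates that pattern under stated assumptions and is not a description of Kuka's system:

- Access control is group-based.
- `Document`, `User`, `can_read`, and `retrieve_for_user` are hypothetical names.
- Simple keyword overlap stands in for real vector search.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    # Groups allowed to read this document, mirrored from the
    # source system's access control list (assumption).
    allowed_groups: frozenset[str]


@dataclass
class User:
    name: str
    groups: set[str] = field(default_factory=set)


def can_read(user: User, doc: Document) -> bool:
    """A user may read a document if they share at least one group with its ACL."""
    return bool(user.groups & doc.allowed_groups)


def retrieve_for_user(user: User, query: str, docs: list[Document]) -> list[str]:
    """Return IDs of matching documents the user is allowed to see, best match first."""
    terms = set(query.lower().split())
    # Filter on permissions BEFORE ranking, so no unreadable document can reach the LLM prompt.
    visible = [d for d in docs if can_read(user, d)]
    # Keyword overlap is a simple stand-in for vector similarity.
    scored = [(len(terms & set(d.text.lower().split())), d) for d in visible]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d.doc_id for score, d in scored if score > 0]


if __name__ == "__main__":
    docs = [
        Document("hr-001", "Salary bands for next year are confidential.", frozenset({"hr"})),
        Document("eng-042", "Axis 3 vibration above threshold triggers maintenance.",
                 frozenset({"engineering", "hr"})),
    ]
    engineer = User("eng", {"engineering"})
    print(retrieve_for_user(engineer, "salary bands", docs))
    print(retrieve_for_user(engineer, "axis 3 vibration", docs))
```

Filtering before ranking, rather than after generation, means a document the user may not read never enters the prompt, so the LLM has nothing to leak.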

This article was first published on automotiveit.eu.